Machine learning (ML) is one of the most exciting and rapidly evolving fields in tech today. And when it comes to landing a job in ML, Google is at the top of many engineers’ dream employers list. But let’s be honest—Google’s interview process is notoriously challenging, especially for ML roles. The good news? With the right preparation, you can crack it.

At InterviewNode, we’ve helped countless software engineers prepare for ML interviews at top companies, including Google. In this blog, we’ll walk you through the top 25 frequently asked questions in Google ML interviews, complete with detailed answers. Whether you’re a seasoned ML engineer or just starting out, this guide will give you the tools and confidence you need to ace your interview.

Let’s get started!

Understanding Google’s ML Interview Process

Before diving into the questions, it’s important to understand what you’re up against. Google’s ML interview process typically consists of the following stages:

Technical Phone Screen: A 45-minute call with a Google engineer focusing on coding and basic ML concepts.

Onsite Interviews: These usually include:

Coding Interviews: Focus on data structures, algorithms, and problem-solving.

ML Theory Interviews: Test your understanding of ML concepts, algorithms, and math.

ML System Design Interviews: Assess your ability to design scalable ML systems.

Behavioral Interviews: Evaluate your communication skills and cultural fit.

Hiring Committee Review: Your performance across all rounds is reviewed before a final decision is made.

Each stage requires a different set of skills, so it’s crucial to prepare holistically. Now, let’s dive into the top 25 questions you’re likely to encounter.

Top 25 Frequently Asked Questions in Google ML Interviews

Section 1: Foundational ML Concepts

1. What is the difference between supervised and unsupervised learning?

Answer:Supervised and unsupervised learning are two core paradigms in machine learning. Here’s how they differ:

Supervised Learning: The model is trained on labeled data, meaning each input has a corresponding output. The goal is to learn a mapping from inputs to outputs. Examples include regression (predicting continuous values) and classification (predicting discrete labels).

Example: Predicting house prices based on features like size and location.

Unsupervised Learning: The model is trained on unlabeled data, and the goal is to find hidden patterns or structures. Examples include clustering (grouping similar data points) and dimensionality reduction (reducing the number of features).

Example: Grouping customers based on purchasing behavior.

Why Google Asks This: This question tests your understanding of basic ML concepts, which is essential for any ML role.

2. What is overfitting, and how can you prevent it?

Answer:Overfitting occurs when a model learns the training data too well, capturing noise and outliers, which harms its performance on unseen data. Here’s how to prevent it:

Regularization: Techniques like L1/L2 regularization add a penalty for large coefficients, discouraging the model from fitting the noise.

Cross-Validation: Use techniques like k-fold cross-validation to ensure the model generalizes well.

Simplify the Model: Reduce the number of features or use a simpler algorithm.

Early Stopping: Stop training when performance on a validation set starts to degrade.

Why Google Asks This: Overfitting is a common challenge in ML, and Google wants to see that you understand how to build robust models.

3. Explain the bias-variance tradeoff.

Answer:The bias-variance tradeoff is a fundamental concept in ML that deals with the tradeoff between two sources of error:

Bias: Error due to overly simplistic assumptions in the model. High bias can cause underfitting.

Variance: Error due to the model’s sensitivity to small fluctuations in the training set. High variance can cause overfitting.

The goal is to find a balance where both bias and variance are low, ensuring the model generalizes well to new data.

Why Google Asks This: This question tests your ability to think critically about model performance and optimization.

4. What is the difference between bagging and boosting?

Answer:Bagging and boosting are ensemble techniques used to improve model performance:

Bagging (Bootstrap Aggregating): Trains multiple models independently on random subsets of the data and averages their predictions. Example: Random Forest.

Boosting: Trains models sequentially, with each model correcting the errors of the previous one. Example: Gradient Boosting Machines (GBM) and AdaBoost.

Why Google Asks This: Ensemble methods are widely used in ML, and Google wants to ensure you understand their strengths and weaknesses.

5. How do you handle missing data in a dataset?

Answer:Handling missing data is a critical step in data preprocessing. Here are some common techniques:

Remove Missing Data: Drop rows or columns with missing values (if the dataset is large enough).

Imputation: Replace missing values with a statistic like the mean, median, or mode.

Predictive Models: Use algorithms like k-Nearest Neighbors (k-NN) to predict missing values.

Flag Missing Data: Add a binary flag to indicate whether a value was missing.

Why Google Asks This: Data quality is crucial for building effective ML models, and Google wants to see that you can handle real-world data challenges.

Section 2: Algorithms and Models

6. Explain how linear regression works.

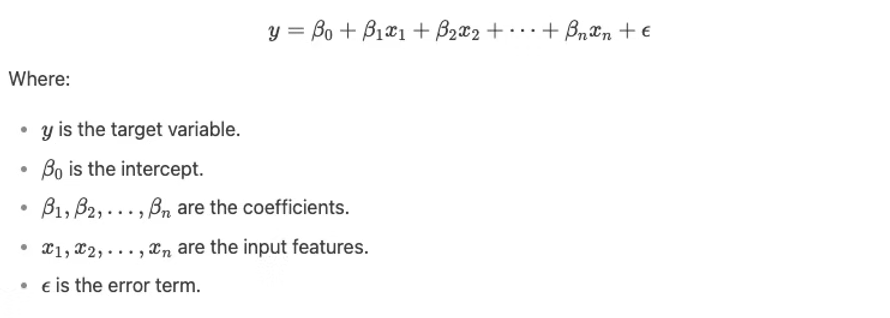

Answer:Linear regression is a supervised learning algorithm used to predict a continuous target variable. It assumes a linear relationship between the input features and the target. The model is represented as:

The goal is to find the coefficients that minimize the error (usually using least squares).

Why Google Asks This: Linear regression is a foundational algorithm, and understanding it is essential for any ML engineer.

7. What is the difference between decision trees and random forests?

Answer:

Decision Trees: A single tree that splits the data based on feature values to make predictions. It’s simple but prone to overfitting.

Random Forests: An ensemble of decision trees trained on random subsets of the data. The final prediction is the average (for regression) or majority vote (for classification) of all trees. Random forests reduce overfitting and improve accuracy.

Why Google Asks This: Random forests are widely used in practice, and Google wants to ensure you understand their advantages over single decision trees.

8. How does a support vector machine (SVM) work?

Answer:SVM is a supervised learning algorithm used for classification and regression. It works by finding the hyperplane that maximizes the margin between two classes. Key concepts include:

Kernel Trick: SVMs can use kernels to transform data into a higher-dimensional space where it’s easier to find a separating hyperplane.

Support Vectors: The data points closest to the hyperplane that influence its position.

Why Google Asks This: SVMs are powerful and versatile, and Google wants to see that you understand their underlying mechanics.

9. What is the difference between k-means and hierarchical clustering?

Answer:

k-Means: Partitions data into k clusters by minimizing the distance between points and their cluster centroids. It requires specifying k in advance.

Hierarchical Clustering: Builds a tree-like structure of clusters, allowing you to explore clusters at different levels of granularity.

Why Google Asks This: Clustering is a key unsupervised learning technique, and Google wants to ensure you understand the differences between popular algorithms.

10. How do you evaluate the performance of a classification model?

Answer:Common evaluation metrics for classification models include:

Accuracy: The percentage of correctly classified instances.

Precision and Recall: Precision measures the accuracy of positive predictions, while recall measures the fraction of positives correctly identified.

F1 Score: The harmonic mean of precision and recall.

ROC-AUC: The area under the receiver operating characteristic curve, which plots the true positive rate against the false positive rate.

Why Google Asks This: Model evaluation is critical, and Google wants to see that you know how to assess performance effectively.

Section 3: Deep Learning

11. What is a neural network, and how does it work?

Answer:A neural network is a computational model inspired by the human brain. It consists of layers of interconnected nodes (neurons) that process input data to produce an output. Here’s how it works:

Input Layer: Receives the input features.

Hidden Layers: Perform transformations on the input data using weights and activation functions.

Output Layer: Produces the final prediction.

During training, the network adjusts its weights using backpropagation to minimize the error between predictions and actual values.

Why Google Asks This: Neural networks are the backbone of deep learning, and Google wants to ensure you understand their fundamentals.

12. What is the difference between CNNs and RNNs?

Answer:

Convolutional Neural Networks (CNNs): Designed for grid-like data (e.g., images). They use convolutional layers to extract spatial features and pooling layers to reduce dimensionality.

Recurrent Neural Networks (RNNs): Designed for sequential data (e.g., time series, text). They use loops to pass information from one step to the next, making them suitable for tasks like language modeling.

Why Google Asks This: CNNs and RNNs are widely used in different domains, and Google wants to see that you understand their applications.

13. What is a transformer, and how does it work?

Answer:Transformers are a type of neural network architecture that revolutionized natural language processing (NLP). Key components include:

Self-Attention Mechanism: Allows the model to weigh the importance of different words in a sentence.

Positional Encoding: Adds information about the position of words in a sequence.

Encoder-Decoder Architecture: Used for tasks like translation, where the encoder processes the input and the decoder generates the output.

Why Google Asks This: Transformers are the foundation of models like BERT and GPT, which are widely used at Google.

14. What is gradient descent, and how does it work?

Answer:Gradient descent is an optimization algorithm used to minimize the loss function in ML models. Here’s how it works:

Initialize the model’s parameters (weights) randomly.

Compute the gradient of the loss function with respect to the parameters.

Update the parameters in the opposite direction of the gradient.

Repeat until convergence.

Variants include stochastic gradient descent (SGD) and mini-batch gradient descent.

Why Google Asks This: Optimization is a core concept in ML, and Google wants to ensure you understand how models learn.

15. What is dropout, and why is it used?

Answer:Dropout is a regularization technique used to prevent overfitting in neural networks. During training, random neurons are "dropped out" (set to zero) with a certain probability. This forces the network to learn robust features that aren’t reliant on specific neurons.

Why Google Asks This: Dropout is a simple yet effective technique, and Google wants to see that you understand its purpose.

Section 4: ML System Design

16. How would you design a recommendation system?

Answer:A recommendation system typically involves the following steps:

Data Collection: Gather user interactions (e.g., clicks, purchases) and item metadata.

Feature Engineering: Create features like user preferences, item popularity, and similarity scores.

Model Selection: Use collaborative filtering, matrix factorization, or deep learning models.

Evaluation: Measure performance using metrics like precision@k or mean average precision (MAP).

Deployment: Serve recommendations in real-time using a scalable infrastructure.

Why Google Asks This: Recommendation systems are a key application of ML, and Google wants to see that you can design scalable solutions.

17. How would you handle imbalanced data in a classification problem?

Answer:Imbalanced data occurs when one class significantly outnumbers the other. Here’s how to handle it:

Resampling: Oversample the minority class or undersample the majority class.

Synthetic Data: Use techniques like SMOTE to generate synthetic samples for the minority class.

Class Weights: Adjust the loss function to give more weight to the minority class.

Evaluation Metrics: Use metrics like F1 score or AUC-PR instead of accuracy.

Why Google Asks This: Imbalanced data is a common challenge, and Google wants to see that you can address it effectively.

18. How would you design a system to detect fraudulent transactions?

Answer:A fraud detection system typically involves:

Data Collection: Gather transaction data and labels (fraudulent/non-fraudulent).

Feature Engineering: Create features like transaction amount, location, and user behavior.

Model Selection: Use algorithms like logistic regression, random forests, or neural networks.

Real-Time Processing: Use stream processing frameworks like Apache Kafka to detect fraud in real-time.

Alert System: Notify users or block transactions flagged as fraudulent.

Why Google Asks This: Fraud detection is a critical application of ML, and Google wants to see that you can design robust systems.

19. How would you scale an ML model to handle millions of users?

Answer:Scaling an ML model involves:

Distributed Training: Use frameworks like TensorFlow or PyTorch to train models on multiple GPUs or machines.

Model Optimization: Use techniques like quantization and pruning to reduce model size.

Inference Serving: Use scalable serving systems like TensorFlow Serving or Kubernetes.

Monitoring: Continuously monitor performance and retrain models as needed.

Why Google Asks This: Scalability is a key concern at Google, and they want to see that you can design systems that handle large-scale data.

20. How would you design a system for real-time object detection?

Answer:A real-time object detection system involves:

Model Selection: Use pre-trained models like YOLO or Faster R-CNN.

Optimization: Optimize the model for inference speed using techniques like quantization.

Hardware Acceleration: Use GPUs or TPUs for faster processing.

Deployment: Serve the model using a real-time inference engine.

Post-Processing: Filter and visualize detected objects in real-time.

Why Google Asks This: Real-time object detection is a challenging problem, and Google wants to see that you can design efficient systems.

Section 5: Coding and Problem-Solving

21. Implement a function to calculate the mean squared error (MSE).

Answer:

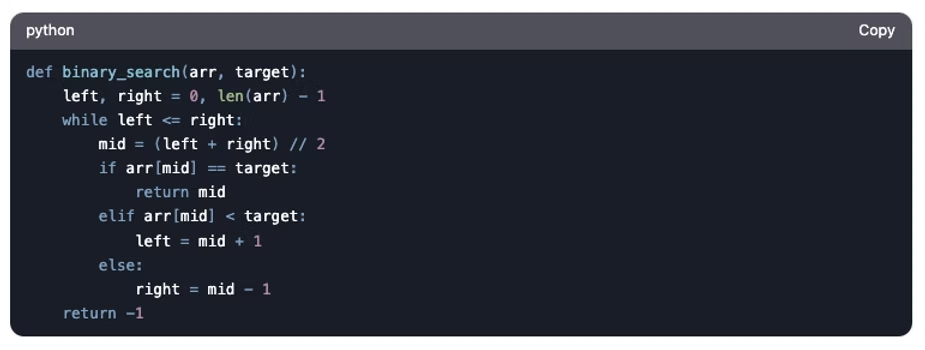

22. Write a function to perform binary search.

Answer:

Why Google Asks This: Binary search is a classic algorithm, and Google wants to see that you can write efficient code.

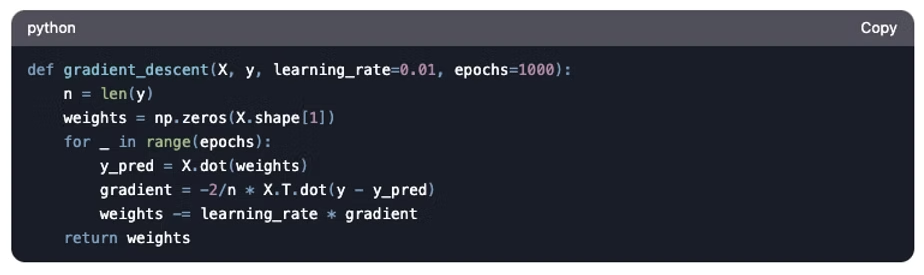

23. Implement gradient descent for linear regression.

Answer:

Why Google Asks This: Implementing gradient descent demonstrates your understanding of optimization.

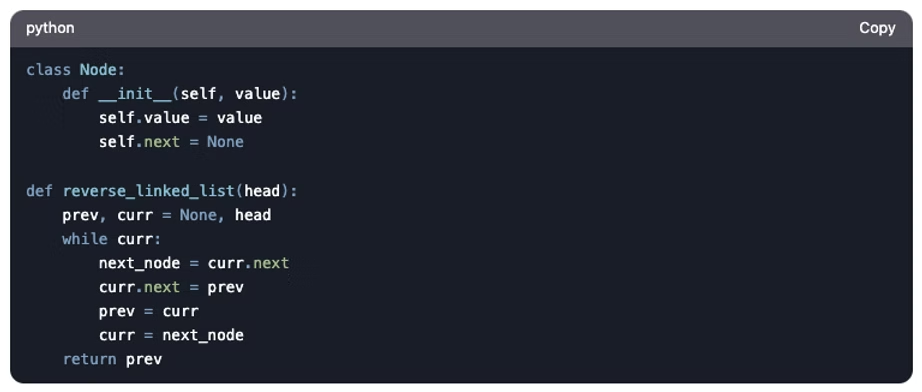

24. Write a function to reverse a linked list.

Answer:

Why Google Asks This: Linked lists are a common data structure, and Google wants to see that you can manipulate them.

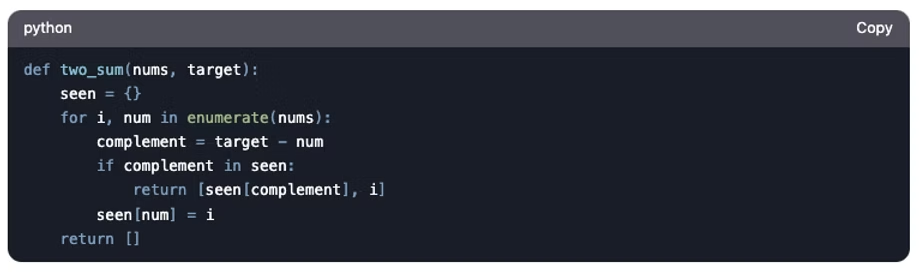

25. Solve the "Two Sum" problem.

Answer:

Why Google Asks This: The "Two Sum" problem tests your ability to solve problems efficiently using hash maps.

Tips for Acing Google ML Interviews

Preparing for a Google ML interview can feel overwhelming, but with the right strategy, you can tackle it with confidence. Here are some tips to help you succeed:

1. Master the Basics

Understand Core Concepts: Make sure you have a solid grasp of foundational ML concepts like supervised vs. unsupervised learning, overfitting, and bias-variance tradeoff.

Practice Coding: Brush up on data structures, algorithms, and problem-solving skills. Platforms like LeetCode and InterviewNode are great for practice.

2. Dive Deep into Algorithms

Know Popular Algorithms: Be prepared to explain and implement algorithms like linear regression, decision trees, SVMs, and neural networks.

Understand Tradeoffs: Be able to discuss the strengths and weaknesses of different algorithms and when to use them.

3. Practice ML System Design

Think Scalably: Google looks for candidates who can design systems that scale to millions of users. Practice designing ML pipelines, recommendation systems, and fraud detection systems.

Focus on Real-World Scenarios: Be ready to discuss how you’d handle challenges like imbalanced data, missing data, and model deployment.

4. Communicate Clearly

Explain Your Thought Process: During the interview, walk the interviewer through your approach to solving problems. Clear communication is key.

Ask Questions: If you’re unsure about a problem, ask clarifying questions. It shows you’re thoughtful and engaged.

5. Leverage Resources

Books: Read books like Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow by Aurélien Géron and Deep Learning by Ian Goodfellow.

Online Courses: Take courses like Andrew Ng’s Machine Learning on Coursera or Deep Learning Specialization.

Practice Platforms: Use InterviewNode to simulate real interview scenarios and get personalized feedback.

6. Stay Calm and Confident

Practice Mock Interviews: Simulate the interview environment to get comfortable with the pressure.

Focus on Learning: Treat the interview as a learning experience rather than a high-stakes test. This mindset can help you stay calm and perform better.

Conclusion

Cracking a Google ML interview is no small feat, but with the right preparation, it’s absolutely achievable. In this blog, we’ve covered the top 25 frequently asked questions in Google ML interviews, along with detailed answers to help you understand the concepts deeply. From foundational ML concepts to advanced system design and coding problems, we’ve got you covered.

Remember, the key to success is consistent practice and a clear understanding of both theory and practical applications. And if you’re looking for a structured way to prepare, InterviewNode is here to help. Our platform offers tailored resources, mock interviews, and expert guidance to help you ace your ML interviews.

So, what are you waiting for? Start preparing today, and take the first step toward landing your dream job at Google. Register for our free webinar to get started!