If you're preparing for a machine learning (ML) interview at Hugging Face, you're likely excited—and maybe a little nervous. Hugging Face is one of the most influential companies in the AI space, especially when it comes to natural language processing (NLP) and transformer models. Their open-source libraries, like transformers, have revolutionized how developers and researchers work with NLP.

But let’s be real: Hugging Face interviews are no walk in the park. They’re designed to test not just your theoretical knowledge but also your practical skills, problem-solving abilities, and passion for AI. To help you ace your interview, we’ve compiled a list of the top 25 frequently asked questions in Hugging Face ML interviews, complete with detailed answers. Whether you're a seasoned ML engineer or just starting out, this guide will give you the edge you need.

Why Hugging Face Interviews Are Unique

Before we dive into the questions, let’s talk about what makes Hugging Face interviews stand out. Unlike traditional ML interviews, Hugging Face places a strong emphasis on NLP, transformers, and open-source contributions. They’re looking for candidates who not only understand the fundamentals of machine learning but also have hands-on experience with their tools and a deep passion for advancing AI.

Here’s what you can expect:

Technical Depth: Be prepared to answer detailed questions about transformer architectures, attention mechanisms, and Hugging Face’s libraries.

Coding Challenges: You’ll likely be asked to write code to preprocess data, fine-tune models, or implement specific NLP tasks.

Behavioral Questions: Hugging Face values collaboration and innovation, so expect questions about your experience working on teams and solving complex problems.

Open-Source Contributions: If you’ve contributed to open-source projects (especially Hugging Face’s repositories), make sure to highlight that—it’s a huge plus.

Now, let’s get to the good stuff: the top 25 questions you need to prepare for.

Top 25 Frequently Asked Questions in Hugging Face ML Interviews

Section 1: Foundational ML Concepts

1. What is the difference between supervised and unsupervised learning?

Answer:Supervised learning involves training a model on labeled data, where the input features are mapped to known output labels. The goal is to learn a mapping function that can predict the output for new, unseen data. Common examples include classification and regression tasks.

Unsupervised learning, on the other hand, deals with unlabeled data. The model tries to find hidden patterns or structures in the data without any guidance. Clustering and dimensionality reduction are typical unsupervised learning tasks.

Why this matters for Hugging Face:Hugging Face’s models often use supervised learning for tasks like text classification, but unsupervised learning techniques (like pretraining on large text corpora) are also crucial for building powerful NLP models.

2. Explain the concept of overfitting and how to prevent it.

Answer:Overfitting occurs when a model learns the training data too well, capturing noise and outliers instead of generalizing to new data. This results in poor performance on unseen data.

To prevent overfitting:

Use techniques like cross-validation.

Regularize the model using methods like L1/L2 regularization.

Employ dropout in neural networks.

Simplify the model architecture or reduce the number of features.

Use data augmentation to increase the diversity of the training data.

Why this matters for Hugging Face:Overfitting is a common challenge when fine-tuning large transformer models, so understanding how to mitigate it is essential.

3. What are the key differences between traditional ML models and deep learning models?

Answer:Traditional ML models (like linear regression, decision trees, or SVMs) rely on hand-engineered features and are often simpler and faster to train. They work well for structured data but struggle with unstructured data like text or images.

Deep learning models, on the other hand, automatically learn features from raw data using multiple layers of neural networks. They excel at handling unstructured data and can capture complex patterns, but they require large amounts of data and computational resources.

Why this matters for Hugging Face:Hugging Face’s transformer models are a prime example of deep learning’s power in NLP, so understanding this distinction is crucial.

Section 2: NLP and Transformers

4. What are transformers, and why are they important in NLP?

Answer:Transformers are a type of neural network architecture introduced in the paper "Attention is All You Need" by Vaswani et al. They revolutionized NLP by replacing traditional recurrent neural networks (RNNs) with self-attention mechanisms, which allow the model to focus on different parts of the input sequence when making predictions.

Transformers are important because:

They handle long-range dependencies in text better than RNNs.

They are highly parallelizable, making them faster to train.

They form the backbone of state-of-the-art models like BERT, GPT, and T5.

Why this matters for Hugging Face:Hugging Face’s entire ecosystem is built around transformer models, so you need to understand them inside and out.

5. Explain the architecture of the Transformer model.

Answer:The Transformer model consists of two main components: the encoder and the decoder. Each component is made up of multiple layers of self-attention and feed-forward neural networks.

Self-Attention Mechanism: This allows the model to weigh the importance of different words in a sentence relative to each other. For example, in the sentence "The cat sat on the mat," the word "cat" might attend more to "sat" and "mat."

Positional Encoding: Since transformers don’t have a built-in sense of word order, positional encodings are added to the input embeddings to provide information about the position of each word.

Feed-Forward Networks: After self-attention, the output is passed through a feed-forward neural network for further processing.

Why this matters for Hugging Face:Understanding the Transformer architecture is fundamental to working with Hugging Face’s models.

6. What is the difference between BERT and GPT?

Answer:BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer) are both transformer-based models, but they differ in their architecture and use cases:

BERT is bidirectional, meaning it looks at both the left and right context of a word simultaneously. It’s primarily used for tasks like text classification, question answering, and named entity recognition.

GPT is unidirectional, meaning it processes text from left to right. It’s designed for generative tasks like text completion, summarization, and dialogue generation.

Why this matters for Hugging Face:Hugging Face’s model hub includes both BERT and GPT variants, so knowing their differences is key.

7. How does the attention mechanism work in transformers?

Answer:The attention mechanism allows the model to focus on different parts of the input sequence when making predictions. It works by computing a weighted sum of all input embeddings, where the weights are determined by the relevance of each input to the current word being processed.

For example, in the sentence "The cat sat on the mat," when processing the word "sat," the model might assign higher weights to "cat" and "mat" because they are more relevant to the action of sitting.

Why this matters for Hugging Face:Attention mechanisms are at the core of transformer models, so you need to understand how they work.

8. What are some common applications of Hugging Face’s transformer models?

Answer:Hugging Face’s transformer models are used for a wide range of NLP tasks, including:

Text Classification: Sentiment analysis, spam detection, etc.

Named Entity Recognition (NER): Identifying entities like names, dates, and locations in text.

Question Answering: Extracting answers from a given context.

Text Generation: Creating coherent and contextually relevant text.

Machine Translation: Translating text from one language to another.

Why this matters for Hugging Face:You’ll likely be asked to discuss real-world applications of their models during the interview.

Section 3: Hugging Face Specific Questions

9. What is Hugging Face’s transformers library, and how does it simplify NLP tasks?

Answer:Hugging Face’s transformers library is an open-source Python library that provides pre-trained transformer models for a wide range of NLP tasks. It simplifies NLP by:

Offering easy-to-use APIs for loading and fine-tuning models.

Supporting a wide variety of models, including BERT, GPT, T5, and more.

Providing tools for tokenization, model evaluation, and deployment.

For example, you can load a pre-trained BERT model and fine-tune it for sentiment analysis in just a few lines of code.

Why this matters for Hugging Face:This is the bread and butter of Hugging Face’s offerings, so you need to be familiar with the library.

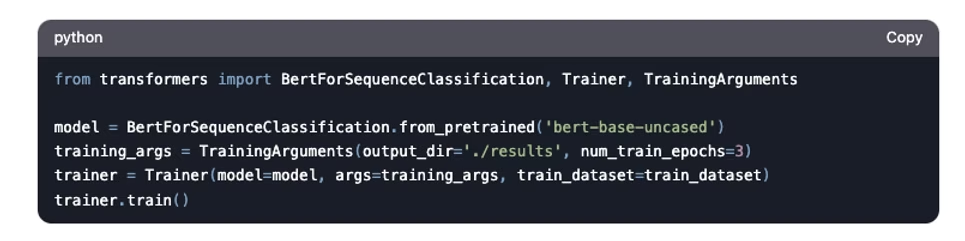

10. How do you fine-tune a pre-trained model using Hugging Face?

Answer:Fine-tuning a pre-trained model involves adapting it to a specific task using a smaller, task-specific dataset. Here’s how you can do it with Hugging Face:

Load a pre-trained model and tokenizer.

Prepare your dataset and tokenize it.

Define a training loop using a framework like PyTorch or TensorFlow.

Train the model on your dataset, adjusting hyperparameters as needed.

Evaluate the model on a validation set.

Why this matters for Hugging Face:Fine-tuning is a core skill for working with Hugging Face’s models.

11. What are some of the most popular models available in Hugging Face’s model hub?

Answer:Hugging Face’s model hub hosts thousands of pre-trained models, including:

BERT: For tasks like text classification and question answering.

GPT: For text generation and completion.

T5: A versatile model for text-to-text tasks.

RoBERTa: An optimized version of BERT.

DistilBERT: A smaller, faster version of BERT.

Why this matters for Hugging Face:You should be familiar with these models and their use cases.

12. How does Hugging Face handle model deployment and serving?

Answer:Hugging Face provides tools like Inference API and Model Hub to simplify model deployment. You can deploy models as REST APIs, integrate them into applications, or share them with the community. They also support ONNX and TensorFlow Serving for production deployment.

Why this matters for Hugging Face:Deployment is a critical part of the ML lifecycle, and Hugging Face makes it easy.

Section 4: Practical Coding and Problem-Solving

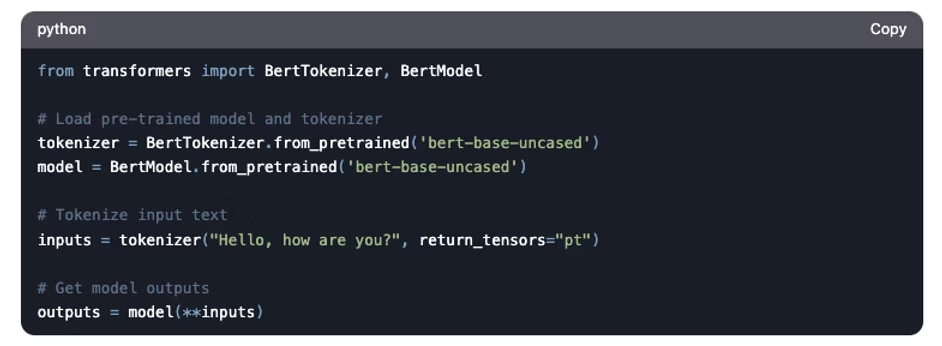

13. Write a Python script to load a pre-trained BERT model using Hugging Face.

Answer:Here’s how you can load a pre-trained BERT model:

Why this matters for Hugging Face:This is a basic but essential skill for working with Hugging Face’s tools.

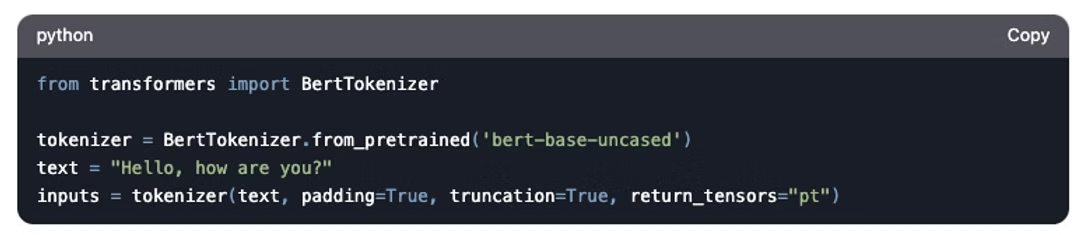

14. How would you preprocess text data for a Hugging Face model?

Answer:Preprocessing text data typically involves:

Tokenization: Splitting text into tokens (words, subwords, or characters).

Padding/Truncation: Ensuring all sequences are the same length.

Encoding: Converting tokens into numerical IDs.

For example:

Why this matters for Hugging Face:Proper preprocessing is crucial for model performance.

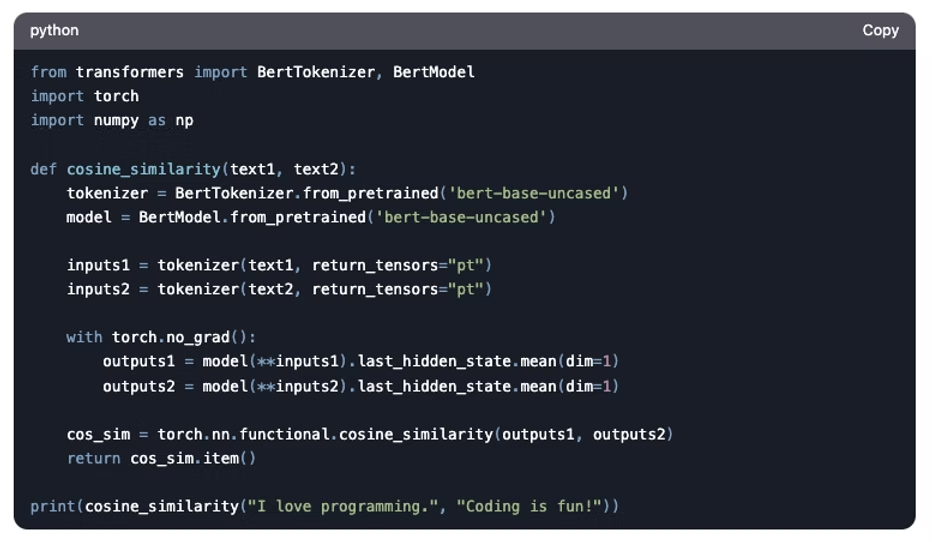

15. Write a function to calculate the cosine similarity between two sentences using Hugging Face embeddings.

Answer:Here’s how you can calculate cosine similarity:

Why this matters for Hugging Face:This demonstrates your ability to work with embeddings and similarity metrics.

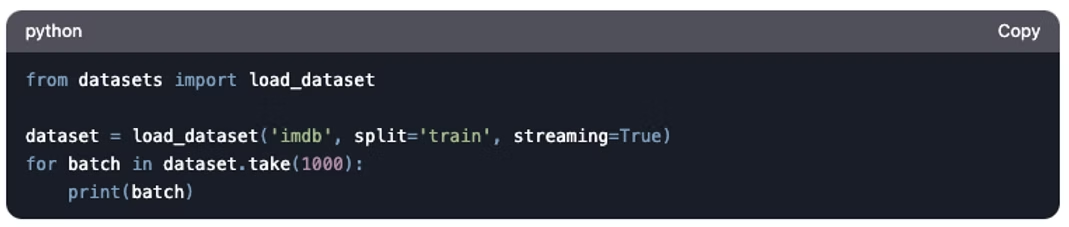

16. How would you handle a large dataset that doesn’t fit into memory when using Hugging Face models?

Answer:To handle large datasets:

Use datasets library from Hugging Face, which supports lazy loading and streaming.

Process data in batches.

Use tools like Apache Arrow for efficient data storage and retrieval.

For example:

Why this matters for Hugging Face:Handling large datasets is a common challenge in NLP.

Section 5: Advanced ML and NLP Concepts

17. What is transfer learning, and how is it used in NLP?

Answer:Transfer learning involves taking a model trained on one task and adapting it to a different but related task. In NLP, this typically means taking a pre-trained language model (like BERT or GPT) and fine-tuning it on a specific dataset for tasks like sentiment analysis or named entity recognition.

Transfer learning is powerful because:

It reduces the need for large labeled datasets.

It leverages knowledge learned from vast amounts of general text data.

It speeds up training and improves performance.

Why this matters for Hugging Face:Hugging Face’s models are built on the principle of transfer learning, so you need to understand it thoroughly.

18. Explain the concept of zero-shot learning and how Hugging Face implements it.

Answer:Zero-shot learning allows a model to perform tasks it has never explicitly been trained on. For example, a model trained on general text data can classify text into categories it has never seen before.

Hugging Face implements zero-shot learning using models like BART and T5, which can generalize to new tasks by leveraging their understanding of language.

Why this matters for Hugging Face:Zero-shot learning is a cutting-edge technique in NLP.

19. What are some challenges in deploying NLP models in production?

Answer:Challenges include:

Latency: Ensuring models respond quickly.

Scalability: Handling large volumes of requests.

Model Size: Compressing large models for deployment.

Data Drift: Ensuring models perform well on new data.

Why this matters for Hugging Face:Deployment is a key part of Hugging Face’s offerings.

20. How do you evaluate the performance of an NLP model?

Answer:Common evaluation metrics include:

Accuracy: For classification tasks.

F1 Score: For imbalanced datasets.

BLEU/ROUGE: For text generation tasks.

Perplexity: For language models.

Why this matters for Hugging Face:Evaluation is critical for ensuring model quality.

Section 6: Behavioral and Open-Ended Questions

21. Describe a time when you had to debug a complex ML model. What was the issue, and how did you resolve it?

Answer:This is your chance to showcase your problem-solving skills. Be specific about the issue (e.g., overfitting, data leakage, or a bug in the code) and walk through your thought process and steps to resolve it. Highlight any collaboration with teammates or innovative solutions you came up with.

Why this matters for Hugging Face:Hugging Face values candidates who can tackle complex problems and work well in teams.

22. How do you stay updated with the latest advancements in NLP and ML?

Answer:Mention resources like research papers (e.g., arXiv), blogs (e.g., Hugging Face’s blog), conferences (e.g., NeurIPS, ACL), and online communities (e.g., Twitter, Reddit).

Why this matters for Hugging Face:Staying updated shows your passion for the field.

23. What are some ethical considerations when deploying NLP models?

Answer:Ethical considerations include:

Bias: Ensuring models don’t perpetuate harmful stereotypes.

Privacy: Protecting user data.

Transparency: Making model decisions interpretable.

Why this matters for Hugging Face:Ethics is a growing concern in AI.

24. How would you explain a complex ML concept to a non-technical stakeholder?

Answer:Use analogies and simple language. For example, explain overfitting as "memorizing the answers instead of understanding the material."

Why this matters for Hugging Face:Communication skills are crucial for collaboration.

25. What are your thoughts on the future of NLP and AI?

Answer:Discuss trends like multimodal models, AI ethics, and the democratization of AI through open-source tools like Hugging Face.

Why this matters for Hugging Face:Hugging Face is at the forefront of these trends.

Tips for Acing Hugging Face ML Interviews

Master the Basics: Ensure you have a strong grasp of ML and NLP fundamentals.

Practice Coding: Work on coding challenges related to Hugging Face’s libraries.

Contribute to Open Source: If possible, contribute to Hugging Face’s repositories or other open-source projects.

Stay Updated: Follow the latest research in NLP and transformers.

Prepare for Behavioral Questions: Be ready to discuss your past experiences and how you’ve overcome challenges.

Conclusion

Preparing for a Hugging Face ML interview can be challenging, but with the right preparation, you can stand out from the crowd. By mastering the questions and concepts covered in this blog, you’ll be well on your way to acing your interview and landing your dream job in AI.

Remember, InterviewNode is here to help you every step of the way. Check out our resources for more tips and practice questions tailored to ML interviews. Good luck!

FAQs

Q: How long does it take to prepare for a Hugging Face ML interview?A: It depends on your background, but we recommend at least 4-6 weeks of focused preparation.

Q: Are Hugging Face interviews more focused on theory or coding?A: They’re a mix of both, with a strong emphasis on practical coding and problem-solving.

Q: Can I use Hugging Face’s transformers library in my interview?A: Absolutely! Familiarity with the library is a big plus.

Good luck with your Hugging Face ML interview! Register for our free webinar to know more about how Interview Node could help you succeed.