1. Introduction

If you’re reading this, chances are you’re dreaming of landing a machine learning role at NVIDIA—the company that’s powering the AI revolution. From self-driving cars to cutting-edge deep learning frameworks, NVIDIA is at the forefront of innovation. But let’s face it: cracking an NVIDIA ML interview is no walk in the park. With thousands of talented engineers vying for a spot, you need to be at the top of your game.

That’s where we come in. At InterviewNode, we’ve helped countless software engineers ace their machine learning interviews at top companies like NVIDIA. In this blog, we’re sharing the top 25 frequently asked questions in NVIDIA ML interviews, complete with detailed answers and expert tips. Whether you’re a seasoned ML engineer or just starting out, this guide will give you the edge you need to stand out.

By the end of this blog, you’ll not only know what to expect in an NVIDIA ML interview but also how to prepare effectively using InterviewNode’s proven strategies and resources. Let’s get started!

2. Why NVIDIA?

Before we dive into the questions, let’s talk about why NVIDIA is such a coveted place to work. NVIDIA isn’t just a tech company—it’s a pioneer in AI and machine learning. Their GPUs (Graphics Processing Units) have become the backbone of modern AI, enabling breakthroughs in fields like computer vision, natural language processing, and autonomous systems.

NVIDIA’s Role in AI/ML

GPUs for AI: NVIDIA’s GPUs are the gold standard for training deep learning models. Their CUDA platform allows developers to harness the power of parallel computing, making it possible to train models faster and more efficiently.

Frameworks and Libraries: NVIDIA has developed tools like cuDNN, TensorRT, and NVIDIA DALI that are widely used in the AI community.

Research and Innovation: From generative AI to robotics, NVIDIA is constantly pushing the boundaries of what’s possible with AI.

Why Work at NVIDIA?

Cutting-Edge Projects: Work on projects that are shaping the future of AI, from autonomous vehicles to AI-powered healthcare.

World-Class Talent: Collaborate with some of the brightest minds in the industry.

Career Growth: NVIDIA offers unparalleled opportunities for learning and advancement.

NVIDIA’s Interview Process

NVIDIA’s interview process is rigorous and typically includes:

Technical Screening: A coding and ML fundamentals assessment.

Onsite Interviews: Deep dives into machine learning, system design, and behavioral questions.

Team Fit: Discussions with potential team members to assess cultural fit.

Now that you know why NVIDIA is such a sought-after employer, let’s talk about how to prepare for their ML interviews.

3. How to Prepare for NVIDIA ML Interviews

Preparing for an NVIDIA ML interview requires a combination of technical expertise, problem-solving skills, and strategic preparation. Here’s a step-by-step guide to help you get started:

1. Understand the Job Role

NVIDIA hires for various ML roles, including Research Scientists, ML Engineers, and AI Software Developers. Tailor your preparation based on the specific role you’re targeting.

2. Brush Up on Fundamentals

Machine Learning: Be solid on concepts like supervised vs. unsupervised learning, bias-variance tradeoff, and evaluation metrics.

Deep Learning: Understand neural networks, backpropagation, and popular architectures like CNNs and RNNs.

Mathematics: Linear algebra, calculus, and probability are essential for ML roles.

3. Practice Coding and Problem-Solving

NVIDIA places a strong emphasis on coding skills. Be prepared to solve algorithmic problems and write efficient code.

Familiarize yourself with CUDA programming and parallel computing concepts.

4. Learn NVIDIA’s Tech Stack

NVIDIA has developed a suite of tools and libraries for AI/ML. Some key ones to know include:

CUDA: For parallel computing on GPUs.

TensorRT: For optimizing deep learning models for inference.

cuDNN: A GPU-accelerated library for deep neural networks.

5. Leverage InterviewNode

At InterviewNode, we specialize in helping candidates like you prepare for top ML interviews. Our platform offers:

Personalized Mock Interviews: Simulate real NVIDIA interviews with expert feedback.

Curated Question Bank: Practice with NVIDIA-specific ML interview questions.

Expert Guidance: Learn from mentors who’ve cracked top ML interviews.

Comprehensive Resources: Study guides, tutorials, and more to help you master the skills you need.

Now that you know how to prepare, let’s dive into the top 25 frequently asked questions in NVIDIA ML interviews.

4. How InterviewNode Can Help You Prepare for NVIDIA ML Interviews

At InterviewNode, we understand that preparing for an NVIDIA ML interview can feel overwhelming. That’s why we’ve built a platform that provides everything you need to succeed. Here’s how we can help:

1. Personalized Mock Interviews

Simulate Real Interviews: Practice with mock interviews designed to mimic NVIDIA’s interview process.

Expert Feedback: Get detailed feedback on your performance, including areas for improvement.

2. Curated Question Bank

NVIDIA-Specific Questions: Access a library of questions frequently asked in NVIDIA ML interviews.

Categorized by Difficulty: Practice questions tailored to your skill level.

3. Expert Guidance

Mentorship: Learn from mentors who’ve successfully cracked NVIDIA interviews.

Tips and Strategies: Get insider tips on NVIDIA’s interview process and expectations.

4. Comprehensive Learning Resources

Study Guides: Master ML fundamentals, deep learning, and CUDA programming.

Tutorials: Learn how to use NVIDIA’s tech stack, including TensorRT and cuDNN.

5. Community Support

Join a Community: Connect with other candidates preparing for NVIDIA interviews.

Group Discussions: Participate in discussions and peer reviews to enhance your learning.

6. Success Stories

Real-Life Examples: Read testimonials from candidates who aced their NVIDIA interviews with InterviewNode’s help.

With InterviewNode by your side, you’ll be well-equipped to tackle NVIDIA’s ML interviews with

5. Top 25 Frequently Asked Questions in NVIDIA ML Interviews

Category 1: Machine Learning Fundamentals

Explain the bias-variance tradeoff.

Why This Question?: This tests your understanding of model performance and generalization, which is critical for building robust ML systems.

Detailed Answer:

Bias refers to errors due to overly simplistic assumptions in the model. A high-bias model is too simple and may underfit the data, failing to capture important patterns. For example, using a linear model for a non-linear problem.

Variance refers to errors due to the model’s sensitivity to small fluctuations in the training data. A high-variance model is too complex and may overfit the data, capturing noise instead of the underlying pattern.

The tradeoff involves balancing bias and variance to minimize the total error. A good model has low bias (fits the training data well) and low variance (generalizes well to unseen data).

Pro Tip: Use techniques like cross-validation to evaluate model performance and regularization (e.g., L1/L2) to control overfitting.

What is overfitting, and how can you prevent it?

Why This Question?: Overfitting is a common challenge in ML, and NVIDIA wants to see if you can address it effectively.

Detailed Answer:

Overfitting occurs when a model learns the training data too well, including noise and outliers, leading to poor performance on unseen data. For example, a deep neural network with too many layers might memorize the training data instead of generalizing.

Prevention Techniques:

More Data: Increasing the size of the training dataset can help the model generalize better.

Regularization: Techniques like L1/L2 regularization penalize large weights, discouraging overfitting.

Simpler Models: Use fewer layers or parameters to reduce model complexity.

Dropout: Randomly drop neurons during training to prevent co-adaptation.

Early Stopping: Stop training when validation performance stops improving.

Pro Tip: NVIDIA’s TensorRT can help optimize models for inference, reducing overfitting by improving generalization.

What is the difference between supervised and unsupervised learning?

Why This Question?: This tests your foundational knowledge of ML paradigms.

Detailed Answer:

Supervised Learning: The model is trained on labeled data, where each input has a corresponding output. The goal is to learn a mapping from inputs to outputs. Examples include:

Classification: Predicting categories (e.g., spam vs. not spam).

Regression: Predicting continuous values (e.g., house prices).

Unsupervised Learning: The model is trained on unlabeled data, and the goal is to find patterns or structures. Examples include:

Clustering: Grouping similar data points (e.g., customer segmentation).

Dimensionality Reduction: Reducing the number of features (e.g., PCA).

Pro Tip: Supervised learning is more common in industry applications, while unsupervised learning is often used for exploratory data analysis.

How do you handle missing data in a dataset?

Why This Question?: Data preprocessing is critical for ML, and NVIDIA wants to see if you can handle real-world data challenges.

Detailed Answer:

Options for Handling Missing Data:

Remove Missing Values: If the missing data is minimal, you can drop rows or columns with missing values.

Imputation: Replace missing values with statistical measures like mean, median, or mode.

Predictive Imputation: Use ML models to predict missing values based on other features.

Use Algorithms That Support Missing Data: Some algorithms, like XGBoost, can handle missing values natively.

Pro Tip: Always analyze the pattern of missing data (e.g., random or systematic) before choosing a strategy.

What is cross-validation, and why is it important?

Why This Question?: This tests your understanding of model evaluation techniques.

Detailed Answer:

Cross-Validation is a technique for evaluating ML models by splitting the data into multiple subsets (folds). The model is trained on some folds and validated on the remaining fold. This process is repeated for each fold.

Why It’s Important:

It provides a more robust estimate of model performance compared to a single train-test split.

It helps detect overfitting by evaluating the model on multiple subsets of the data.

Common Methods:

k-Fold Cross-Validation: Split the data into k folds and rotate the validation fold.

Stratified k-Fold: Ensures each fold has the same proportion of target classes.

Pro Tip: Use k-fold cross-validation for small datasets and stratified k-fold for imbalanced datasets.

Category 2: Deep Learning and Neural Networks

How does a convolutional neural network (CNN) work?

Why This Question?: CNNs are widely used in computer vision, a key area for NVIDIA.

Detailed Answer:

CNNs are designed to process grid-like data, such as images. They consist of:

Convolutional Layers: Apply filters (kernels) to extract features like edges, textures, and patterns. Each filter slides over the input, performing element-wise multiplication and summation.

Pooling Layers: Reduce spatial dimensions (e.g., max pooling selects the maximum value in a window).

Fully Connected Layers: Combine features for classification or regression.

CNNs leverage local spatial correlations in data, making them highly efficient for tasks like image recognition.

Pro Tip: NVIDIA’s cuDNN library accelerates CNN operations on GPUs, so familiarize yourself with it.

What is backpropagation, and why is it important?

Why This Question?: Backpropagation is the foundation of training neural networks.

Detailed Answer:

Backpropagation is an algorithm used to train neural networks by minimizing the error between predicted and actual outputs. It works in two phases:

Forward Pass: Compute the output and calculate the loss (difference between prediction and target).

Backward Pass: Propagate the loss backward through the network, computing gradients for each weight using the chain rule.

Why It’s Important: It enables neural networks to learn from data and improve over time by adjusting weights to minimize error.

Pro Tip: NVIDIA GPUs are optimized for backpropagation, so understanding parallel computing can give you an edge.

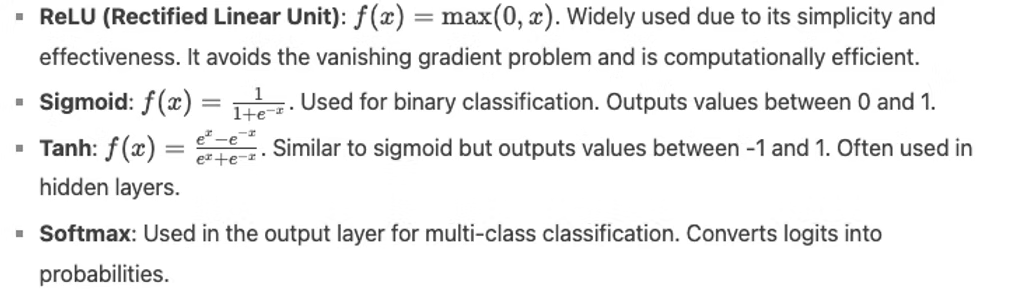

What are some common activation functions, and when would you use them?

Why This Question?: Activation functions introduce non-linearity into neural networks.

Detailed Answer:

Pro Tip: ReLU is the default choice for most deep learning models due to its computational efficiency.

Category 2: Deep Learning and Neural Networks

What is a vanishing gradient, and how can you address it?

Why This Question?: This tests your understanding of deep learning challenges and solutions.

Detailed Answer:

Vanishing Gradient Problem: During backpropagation, gradients can become very small as they propagate backward through the network. This slows down or stops learning because weights are updated minimally.

Causes:

Activation functions like sigmoid or tanh squash inputs into a small range, leading to small gradients.

Deep networks with many layers amplify this issue.

Solutions:

ReLU Activation: ReLU avoids the vanishing gradient problem because its gradient is 1 for positive inputs.

Batch Normalization: Normalizes layer inputs to stabilize training.

Residual Networks (ResNets): Use skip connections to allow gradients to flow directly through the network.

Pro Tip: NVIDIA’s frameworks like TensorRT can help mitigate this issue by optimizing gradient computations.

What is transfer learning, and when would you use it?

Why This Question?: Transfer learning is a key technique in deep learning, especially for NVIDIA’s applications.

Detailed Answer:

Transfer Learning involves using a pre-trained model (trained on a large dataset) and fine-tuning it for a new task. For example, using a model trained on ImageNet for a custom image classification task.

When to Use It:

When you have limited data for the new task.

When the new task is similar to the original task (e.g., both involve image recognition).

Steps:

Remove the final layer of the pre-trained model.

Add a new layer for the new task.

Fine-tune the model on the new dataset.

Example: Using a pre-trained ResNet model for medical image analysis.

Pro Tip: NVIDIA’s NGC catalog offers pre-trained models for transfer learning, saving you time and resources.

Category 3: Programming and Algorithms

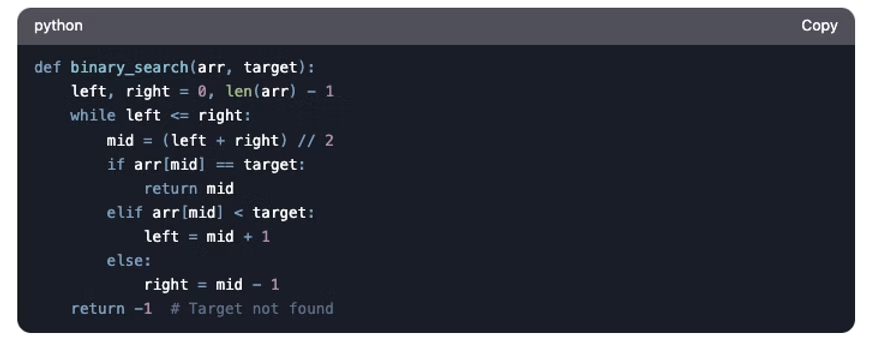

Write a Python function to implement a binary search.

Why This Question?: This tests your coding and problem-solving skills, which are critical for NVIDIA roles.

Detailed Answer:

Explanation:

The function takes a sorted array arr and a target value.

It uses two pointers, left and right, to narrow down the search range.

The middle element (mid) is compared to the target. If it matches, the index is returned. If not, the search range is halved.

The process repeats until the target is found or the search range is exhausted.

Time Complexity: O(log n), where n is the size of the array.

Pro Tip: Optimize your code for performance, especially when working with large datasets on NVIDIA GPUs.

12. How would you parallelize a matrix multiplication algorithm?

Why This Question?: Parallel computing is central to NVIDIA’s technology.

Detailed Answer:

Matrix Multiplication involves multiplying two matrices to produce a third matrix. For large matrices, this can be computationally expensive.

Parallelization:

Divide the task into smaller sub-tasks that can be executed concurrently on GPU cores.

Use CUDA to write parallel code for NVIDIA GPUs.

Example:

Each thread computes one element of the resulting matrix.

Use shared memory to store intermediate results and reduce global memory access.

Code Snippet (CUDA pseudocode):

Pro Tip: Familiarize yourself with NVIDIA’s cuBLAS library, which provides optimized routines for matrix operations.

13. Explain the time complexity of common sorting algorithms.

Why This Question?: This tests your understanding of algorithms and efficiency.

Detailed Answer:

QuickSort:

Average Case: O(n log n).

Worst Case: O(n^2) (occurs when the pivot is poorly chosen).

How It Works: Divides the array into smaller sub-arrays using a pivot and recursively sorts them.

MergeSort:

Time Complexity: O(n log n) in all cases.

How It Works: Divides the array into two halves, sorts them recursively, and merges the sorted halves.

BubbleSort:

Time Complexity: O(n^2).

How It Works: Repeatedly swaps adjacent elements if they are in the wrong order.

Pro Tip: Use efficient algorithms like QuickSort or MergeSort for large datasets on GPUs.

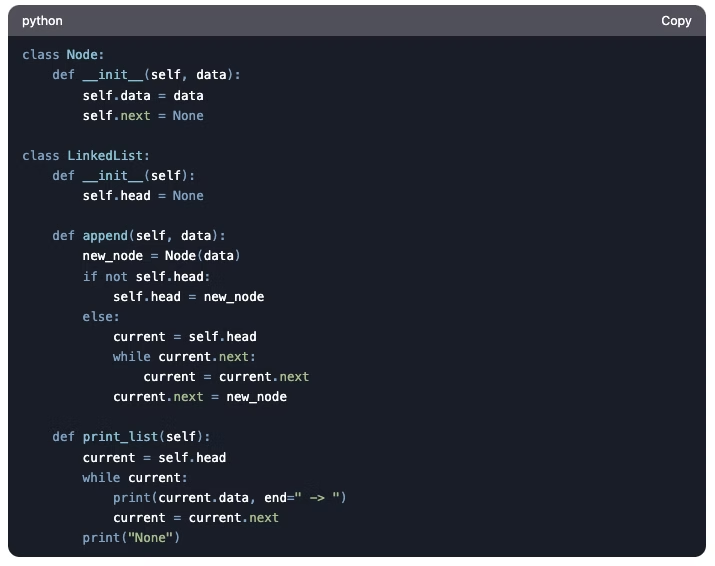

Why This Question?: This tests your understanding of data structures.

Detailed Answer:

Explanation:

A Node represents an element in the linked list, containing data and a pointer to the next node.

The LinkedList class manages the list, with methods like append to add elements and print_list to display the list.

Pro Tip: Practice implementing other data structures like trees and graphs.

15. What is dynamic programming, and how is it used?

Why This Question?: This tests your problem-solving approach.

Detailed Answer:

Dynamic Programming (DP) is a method for solving complex problems by breaking them into smaller subproblems and storing their solutions to avoid redundant calculations.

Key Characteristics:

Optimal Substructure: The optimal solution to the problem can be constructed from optimal solutions of subproblems.

Overlapping Subproblems: The problem can be broken down into subproblems that are reused multiple times.

Example: The Fibonacci sequence.

Without DP: Exponential time complexity due to redundant calculations.

With DP: Store intermediate results in a table to achieve O(n) time complexity.

Pro Tip: Use DP for problems like the knapsack problem, longest common subsequence, or matrix chain multiplication.

Category 4: System Design and Optimization

Design a system to train a deep learning model on a large dataset.

Why This Question?: This tests your ability to design scalable and efficient systems, which is critical for NVIDIA’s large-scale AI projects.

Detailed Answer:

Key Components:

Data Pipeline:

Use distributed storage (e.g., AWS S3, Google Cloud Storage) to store large datasets.

Implement data loaders (e.g., TensorFlow Dataset API, PyTorch DataLoader) to efficiently load and preprocess data.

Distributed Training:

Use frameworks like Horovod or PyTorch Distributed to split the workload across multiple GPUs or nodes.

Implement data parallelism (split data across devices) or model parallelism (split model across devices).

Hardware:

Leverage NVIDIA DGX systems for high-performance training.

Use GPUs with large memory (e.g., A100) to handle large batch sizes.

Monitoring and Logging:

Use tools like TensorBoard or Weights & Biases to monitor training progress.

Log metrics (e.g., loss, accuracy) and visualize them in real-time.

Example: Training a ResNet-50 model on ImageNet using 8 GPUs with Horovod.

Pro Tip: Optimize data preprocessing using NVIDIA’s DALI library to reduce bottlenecks.

How would you optimize a model for inference on edge devices?

Why This Question?: NVIDIA is a leader in edge AI, and this question tests your ability to optimize models for real-world applications.

Detailed Answer:

Optimization Techniques:

Quantization:

Reduce precision (e.g., FP32 to INT8) to speed up inference and reduce memory usage.

Use tools like TensorRT for post-training quantization.

Pruning:

Remove unnecessary weights or neurons to reduce model size.

Use techniques like magnitude-based pruning or lottery ticket hypothesis.

Knowledge Distillation:

Train a smaller model (student) to mimic a larger model (teacher).

Model Compression:

Use techniques like weight sharing or low-rank factorization.

Example: Optimizing a YOLO model for object detection on NVIDIA Jetson devices.

Pro Tip: Experiment with NVIDIA’s Jetson platform for edge AI development.

What is model quantization, and why is it useful?

Why This Question?: Quantization is key for optimizing models for deployment, especially on resource-constrained devices.

Detailed Answer:

Quantization involves reducing the precision of model weights and activations (e.g., from 32-bit floating-point to 8-bit integers).

Why It’s Useful:

Faster Inference: Lower precision computations are faster.

Reduced Memory Usage: Smaller models require less memory, making them suitable for edge devices.

Lower Power Consumption: Efficient computations reduce energy usage.

Types of Quantization:

Post-Training Quantization: Quantize a pre-trained model without retraining.

Quantization-Aware Training: Simulate quantization during training to improve accuracy.

Example: Quantizing a BERT model for NLP tasks using TensorRT.

Pro Tip: NVIDIA’s TensorRT supports quantization for efficient inference.

How would you handle imbalanced data in a classification problem?

Why This Question?: This tests your ability to handle real-world data challenges.

Detailed Answer:

Imbalanced Data occurs when one class is significantly underrepresented (e.g., fraud detection).

Techniques:

Resampling:

Oversampling: Increase the number of minority class samples (e.g., SMOTE).

Undersampling: Reduce the number of majority class samples.

Class Weighting: Assign higher weights to minority class samples during training.

Data Augmentation: Generate synthetic samples for the minority class.

Ensemble Methods: Use techniques like bagging or boosting to improve performance.

Evaluation Metrics:

Use metrics like F1-score, AUC-ROC, or precision-recall curve instead of accuracy.

Example: Handling imbalanced data in a medical diagnosis dataset.

Pro Tip: Use libraries like imbalanced-learn for resampling techniques.

What is distributed training, and how does it work?

Why This Question?: NVIDIA is a leader in distributed computing, and this question tests your understanding of large-scale training.

Detailed Answer:

Distributed Training involves splitting the workload across multiple GPUs or nodes to speed up training.

Approaches:

Data Parallelism:

Split the dataset across devices.

Each device computes gradients on a subset of the data and synchronizes with others.

Model Parallelism:

Split the model across devices.

Each device computes a portion of the model.

Frameworks:

Horovod: A distributed training framework that works with TensorFlow, PyTorch, and others.

PyTorch Distributed: Native support for distributed training in PyTorch.

Example: Training a GPT-3 model on 1,000 GPUs using data parallelism.

Pro Tip: Use NVIDIA’s NCCL library for efficient communication between GPUs.

Category 5: Behavioral and Situational Questions

Tell me about a time you faced a challenging technical problem.

Why This Question?: This tests your problem-solving skills and resilience.

Detailed Answer:

Use the STAR method (Situation, Task, Action, Result) to structure your response.

Example:

Situation: While working on a computer vision project, I encountered a bug in the model’s training loop.

Task: Debug the issue and improve model accuracy.

Action: I isolated the problem by analyzing the loss curves and consulting documentation. I discovered that the learning rate was too high.

Result: After adjusting the learning rate, the model’s accuracy improved by 15%.

Pro Tip: Align your answer with NVIDIA’s values, such as innovation and collaboration.

How do you stay updated with the latest advancements in AI/ML?

Why This Question?: NVIDIA values candidates who are passionate about learning and staying ahead of the curve.

Detailed Answer:

Resources:

Research Papers: Read papers on arXiv, NeurIPS, or CVPR.

Blogs: Follow NVIDIA Developer Blog, Towards Data Science, or Distill.

Conferences: Attend NVIDIA GTC, CVPR, or ICML.

Online Courses: Take courses on Coursera, edX, or Fast.ai.

Example: “I recently read a paper on transformer models and implemented a simplified version for a personal project.”

Pro Tip: Highlight your participation in NVIDIA’s developer programs or open-source projects.

Describe a project where you applied machine learning to solve a real-world problem.

Why This Question?: This tests your practical experience and ability to apply ML.

Detailed Answer:

Use the STAR method to structure your response.

Example:

Situation: I worked on a project to predict customer churn for a telecom company.

Task: Build a model to identify customers at risk of leaving.

Action: I preprocessed the data, engineered features, and trained an XGBoost model.

Result: The model achieved 85% accuracy and helped reduce churn by 20%.

Pro Tip: Use metrics to quantify the impact (e.g., improved accuracy by 20%).

How do you handle tight deadlines and competing priorities?

Why This Question?: This tests your time management and prioritization skills.

Detailed Answer:

Approach:

Prioritize Tasks: Identify high-impact tasks and focus on them first.

Communicate: Keep stakeholders informed about progress and challenges.

Stay Organized: Use tools like Trello or Jira to manage tasks.

Example: “During a project, I had to deliver a model while also preparing a presentation. I prioritized the model and delegated parts of the presentation to a teammate.”

Pro Tip: Provide a specific example from your experience.

Why do you want to work at NVIDIA?

Why This Question?: This tests your motivation and alignment with NVIDIA’s mission.

Detailed Answer:

Key Points:

Innovation: Highlight NVIDIA’s impact on AI/ML and cutting-edge projects.

Culture: Mention NVIDIA’s collaborative and innovative culture.

Career Growth: Talk about opportunities for learning and advancement.

Example: “I’m inspired by NVIDIA’s work in AI and want to contribute to projects that push the boundaries of what’s possible. I’m particularly excited about working with CUDA and TensorRT to optimize deep learning models.”

Pro Tip: Mention specific projects or technologies that excite you.

6. Tips to Ace NVIDIA ML Interviews

Master NVIDIA’s Tech Stack: Be proficient in CUDA, TensorRT, and other NVIDIA tools.

Practice Coding: Solve algorithmic problems on platforms like LeetCode and HackerRank.

Showcase Real-World Experience: Highlight projects where you’ve applied ML to solve complex problems.

Ask Insightful Questions: Demonstrate your curiosity about NVIDIA’s work and mission.

Leverage InterviewNode: Use our platform to practice mock interviews and get expert feedback.

7. Conclusion

Cracking an NVIDIA ML interview is challenging but achievable with the right preparation. By mastering the top 25 questions covered in this blog and leveraging InterviewNode’s resources, you’ll be well on your way to landing your dream job at NVIDIA.

Ready to take the next step? Sign up for InterviewNode today and start your journey toward acing your NVIDIA ML interview!

8. FAQs

Q1: How long does it take to prepare for an NVIDIA ML interview?

A: It depends on your current skill level, but we recommend at least 2-3 months of focused preparation.

Q2: What are the most important skills for NVIDIA ML roles?

A: Strong fundamentals in ML, deep learning, and programming, along with experience in NVIDIA’s tech stack.

Q3: How can InterviewNode help me prepare?

A: InterviewNode offers personalized mock interviews, curated question banks, expert guidance, and comprehensive learning resources tailored to NVIDIA’s interview process.

Good luck with your NVIDIA ML interview! Register for our free webinar to know more about how Interview Node could help you succeed.